
Spark Basic Programming: Final Exam Fill-in-the-Blank Questions

袁宜

2024-05-11

[Popular Science]



Introduction to Spark Programming

Apache Spark is a powerful open-source distributed computing system that provides an easy-to-use interface for programming entire clusters with implicit data parallelism and fault tolerance. Spark's core abstraction is the resilient distributed dataset (RDD), which allows programmers to perform in-memory computations on large clusters in a fault-tolerant manner.

Before diving into Spark programming, it's essential to understand some key concepts:

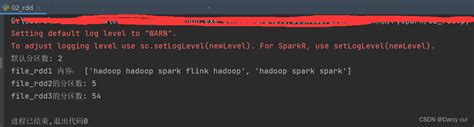

- RDD (Resilient Distributed Dataset): The RDD is the fundamental data structure of Spark. It represents an immutable, distributed collection of objects that can be operated on in parallel. RDDs are fault-tolerant and can be rebuilt if a partition is lost.

- Transformations: Transformations in Spark are operations that produce a new RDD from an existing one. Examples include map, filter, and reduceByKey.

- Actions: Actions are operations that trigger the execution of transformations and return results to the driver program or write data to external storage. Examples include count, collect, and saveAsTextFile.

- SparkContext: The `SparkContext` is the entry point to Spark programming and represents the connection to a Spark cluster. It is used to create RDDs, broadcast variables, and accumulators.

- Driver Program: The driver program is the main program that creates the `SparkContext` and coordinates the execution of operations on the cluster.

- Cluster Manager: Spark can run on various cluster managers like Apache Mesos, Hadoop YARN, or Spark's standalone cluster manager. The cluster manager allocates resources and schedules tasks on the cluster.
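The relationship between transformations, actions, and RDD lineage can be sketched in plain Python. This is only a toy model, not Spark's implementation: the `ToyRDD` class and the `traced_square` helper are hypothetical names introduced for illustration. It shows that transformations merely record a step, while an action replays the recorded steps from the original data.

```python
class ToyRDD:
    """Plain-Python stand-in for an RDD (hypothetical, for illustration only)."""

    def __init__(self, source, parent=None, op=None):
        self._source = source   # only set on the root dataset
        self._parent = parent   # lineage: the dataset this one derives from
        self._op = op           # step applied to the parent's rows

    # Transformations: return a new ToyRDD and do no work yet.
    def map(self, fn):
        return ToyRDD(None, parent=self, op=lambda rows: (fn(r) for r in rows))

    def filter(self, pred):
        return ToyRDD(None, parent=self, op=lambda rows: (r for r in rows if pred(r)))

    def _compute(self):
        # Walk the lineage back to the root and replay each recorded step.
        if self._parent is None:
            return iter(self._source)
        return self._op(self._parent._compute())

    # Actions: trigger the computation and return a result to the caller.
    def collect(self):
        return list(self._compute())

    def count(self):
        return sum(1 for _ in self._compute())


calls = []

def traced_square(x):
    calls.append(x)  # record when the function actually runs
    return x * x

numbers = ToyRDD([1, 2, 3, 4, 5])
evens_squared = numbers.map(traced_square).filter(lambda x: x % 2 == 0)
calls_before_action = len(calls)   # still 0: no transformation has run yet
result = evens_squared.collect()   # the action triggers the work
```

Calling a second action such as `evens_squared.count()` replays the lineage again in this model, which mirrors how an RDD can be rebuilt from its recorded steps rather than from stored results.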

Let's look at a simple example of Spark programming in Python:

```python
# Import SparkContext from PySpark
from pyspark import SparkContext

# Create a SparkContext that runs locally
sc = SparkContext("local", "Simple App")

# Create an RDD from a list of numbers
data = [1, 2, 3, 4, 5]
rdd = sc.parallelize(data)

# Perform a transformation: square each number
squared_rdd = rdd.map(lambda x: x ** 2)

# Perform an action: collect the squared numbers to the driver program
squared_numbers = squared_rdd.collect()

# Print the squared numbers
for num in squared_numbers:
    print(num)

# Release the resources held by the SparkContext
sc.stop()
```

In this example, we first create a SparkContext using the `SparkContext` class. Then, we create an RDD from a list of numbers using the `parallelize` method. Next, we apply the `map` transformation to square each number in the RDD. Finally, we use the `collect` action to bring the squared numbers back to the driver program and print them.
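To make the roles of `parallelize`, `map`, and `collect` more concrete, here is a plain-Python model of the same pipeline that needs no Spark installation. It is a sketch under stated assumptions: the helper functions below are hypothetical stand-ins, threads stand in for cluster executors, and the way the list is sliced into partitions is illustrative and may differ from what Spark actually does.

```python
from concurrent.futures import ThreadPoolExecutor

def parallelize(data, num_partitions):
    """Split a list into roughly equal contiguous partitions (illustrative)."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions

def map_partitions(partitions, fn):
    """Apply fn to every element, with one worker task per partition."""
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        return list(pool.map(lambda part: [fn(x) for x in part], partitions))

def collect(partitions):
    """Concatenate the partition results in partition order."""
    return [x for part in partitions for x in part]

partitions = parallelize([1, 2, 3, 4, 5], num_partitions=2)
squared = collect(map_partitions(partitions, lambda x: x ** 2))
print(squared)
```

The point of the model is that the function passed to `map` only ever sees one element at a time, so each partition can be processed independently before the results are gathered.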

When programming with Spark, consider the following best practices:

- Use Lazy Evaluation: Spark employs lazy evaluation, meaning transformations are not executed until an action is invoked. This allows Spark to optimize the execution plan.

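- Minimize Data Shuffling: Shuffling moves data between partitions across the network, which makes it one of the more expensive operations in Spark. Reduce it by choosing transformations that combine data before it is shuffled (for example, `reduceByKey` rather than `groupByKey` for aggregations) and by partitioning data sensibly.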

- Use Broadcast Variables and Accumulators: Broadcast variables allow efficient sharing of large read-only variables across tasks, while accumulators enable aggregating values from tasks back to the driver program.

- Monitor and Tune Performance: Monitor the performance of your Spark jobs using tools like Spark UI and optimize performance by tuning parameters like the number of partitions, memory settings, and parallelism.

- Handle Fault Tolerance: Spark provides fault tolerance out of the box, but it's essential to understand how to handle failures gracefully and optimize recovery strategies.
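The advice on minimizing shuffling can be illustrated with a small plain-Python model. This is not Spark code: the two functions are hypothetical and simply count how many records would have to cross partition boundaries when summing values per key. One mimics a `groupByKey`-style aggregation, where every record is shuffled; the other mimics a `reduceByKey`-style aggregation, where values are first combined inside each partition.

```python
from collections import defaultdict

def shuffle_without_combine(partitions):
    """groupByKey-style: every (key, value) record is shuffled as-is."""
    shuffled = [record for part in partitions for record in part]
    totals = defaultdict(int)
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)

def shuffle_with_combine(partitions):
    """reduceByKey-style: sum per key inside each partition first."""
    shuffled = []
    for part in partitions:
        local = defaultdict(int)
        for key, value in part:
            local[key] += value
        shuffled.extend(local.items())  # one record per key per partition
    totals = defaultdict(int)
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)

partitions = [
    [("a", 1), ("b", 1), ("a", 1), ("a", 1)],
    [("b", 1), ("b", 1), ("a", 1), ("b", 1)],
]
plain_totals, plain_records = shuffle_without_combine(partitions)
combined_totals, combined_records = shuffle_with_combine(partitions)
print(plain_records, combined_records)
```

Both approaches produce the same totals, but the pre-combined version moves fewer records. The saving grows as the number of values per key in each partition grows.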

Spark programming offers a scalable and efficient way to process large-scale data using distributed computing. By understanding the core concepts and following best practices, developers can harness the full power of Spark to build robust and high-performance data processing applications.